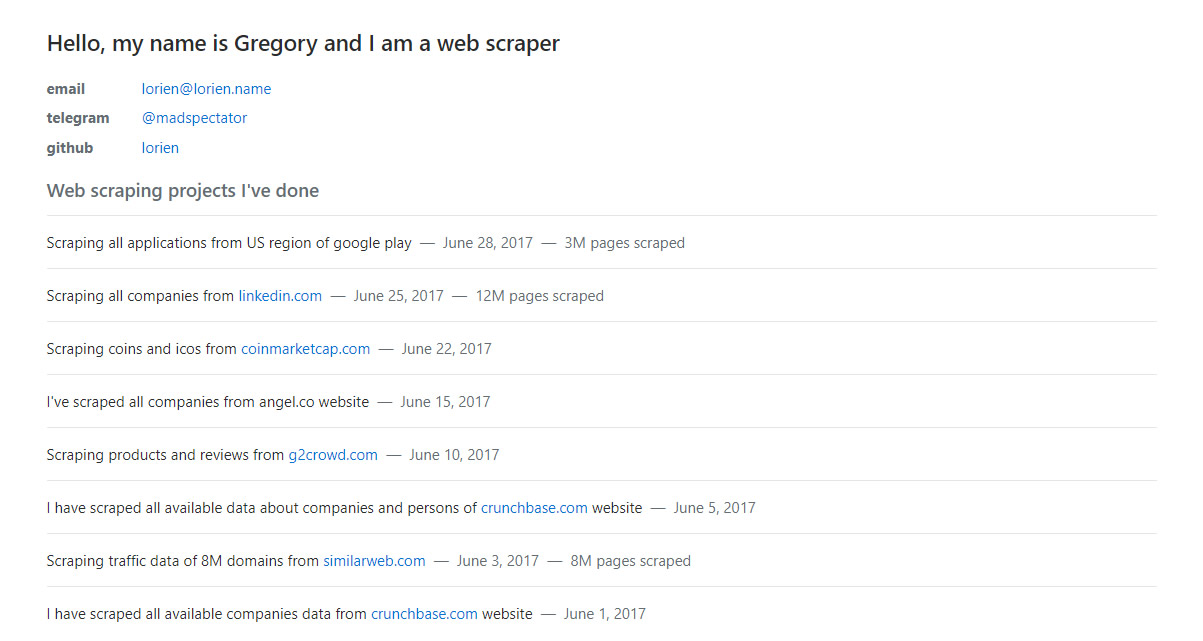

Hello, my name is Gregory and I am a web scraper

telegram

github

Web scraping projects I've done

Scraping all applications from the US region of Google Play — June 28, 2017 — 3M pages scraped

Scraping all companies from linkedin.com — June 25, 2017 — 12M pages scraped

Scraping coins and icos from coinmarketcap.com — June 22, 2017

I've scraped all companies from the angel.co website — June 15, 2017

Scraping products and reviews from g2crowd.com — June 10, 2017

I have scraped all available data about companies and people on the crunchbase.com website — June 5, 2017

Scraping traffic data of 8M domains from similarweb.com — June 3, 2017 — 8M pages scraped

I have scraped all available company data from the crunchbase.com website — June 1, 2017

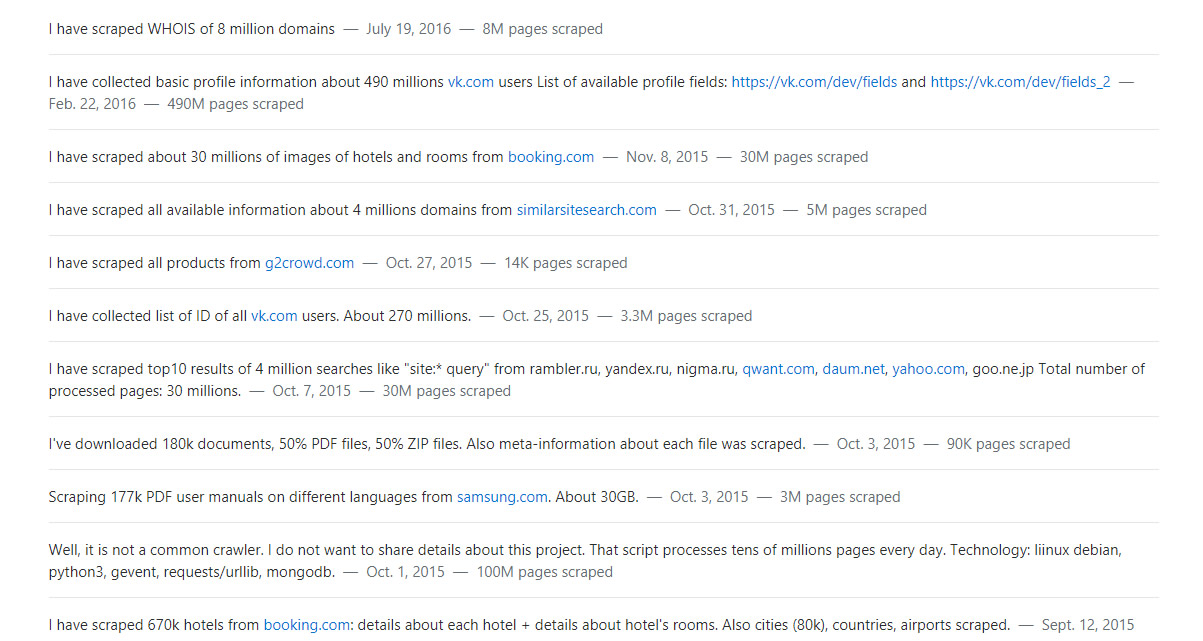

I have scraped WHOIS records of 8 million domains — July 19, 2016 — 8M pages scraped

I have collected basic profile information about 490 million vk.com users. List of available profile fields: https://vk.com/dev/fields and https://vk.com/dev/fields_2 — Feb. 22, 2016 — 490M pages scraped

I have scraped about 30 million images of hotels and rooms from booking.com — Nov. 8, 2015 — 30M pages scraped

I have scraped all available information about 4 million domains from similarsitesearch.com — Oct. 31, 2015 — 5M pages scraped

I have scraped all products from g2crowd.com — Oct. 27, 2015 — 14K pages scraped

I have collected a list of the IDs of all vk.com users, about 270 million. — Oct. 25, 2015 — 3.3M pages scraped

I have scraped the top 10 results of 4 million searches like "site:* query" from rambler.ru, yandex.ru, nigma.ru, qwant.com, daum.net, yahoo.com, and goo.ne.jp. Total number of processed pages: 30 million. — Oct. 7, 2015 — 30M pages scraped

I've downloaded 180k documents, 50% PDF files and 50% ZIP files. Meta-information about each file was also scraped. — Oct. 3, 2015 — 90K pages scraped

Scraping 177k PDF user manuals in different languages from samsung.com. About 30GB. — Oct. 3, 2015 — 3M pages scraped

Well, it is not a common crawler. I do not want to share details about this project. The script processes tens of millions of pages every day. Technology: Debian Linux, python3, gevent, requests/urllib, mongodb. — Oct. 1, 2015 — 100M pages scraped

I have scraped 670k hotels from booking.com: details about each hotel plus details about each hotel's rooms. Cities (80k), countries, and airports were also scraped. — Sept. 12, 2015 — 1000K pages scraped

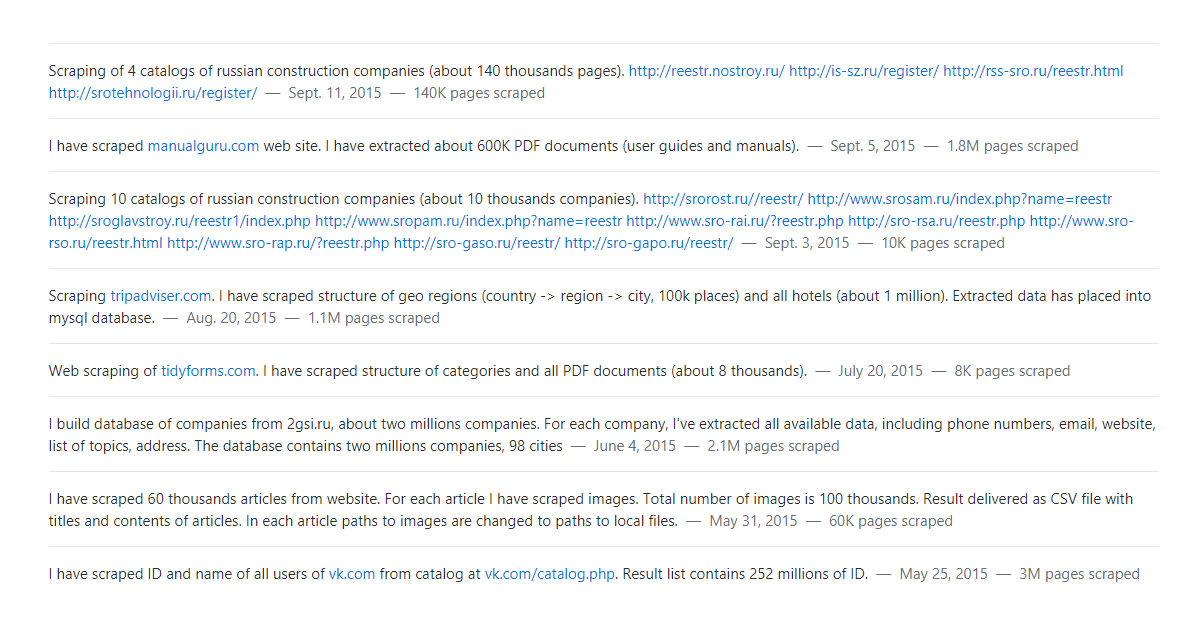

Scraping of 4 catalogs of Russian construction companies (about 140 thousand pages). http://reestr.nostroy.ru/ http://is-sz.ru/register/ http://rss-sro.ru/reestr.html http://srotehnologii.ru/register/ — Sept. 11, 2015 — 140K pages scraped

I have scraped manualguru.com web site. I have extracted about 600K PDF documents (user guides and manuals). — Sept. 5, 2015 — 1.8M pages scraped

Scraping 10 catalogs of Russian construction companies (about 10 thousand companies). http://srorost.ru//reestr/ http://www.srosam.ru/index.php?name=reestr http://sroglavstroy.ru/reestr1/index.php http://www.sropam.ru/index.php?name=reestr http://www.sro-rai.ru/?reestr.php http://sro-rsa.ru/reestr.php http://www.sro-rso.ru/reestr.html http://www.sro-rap.ru/?reestr.php http://sro-gaso.ru/reestr/ http://sro-gapo.ru/reestr/ — Sept. 3, 2015 — 10K pages scraped

Scraping tripadvisor.com. I have scraped the structure of geo regions (country -> region -> city, 100k places) and all hotels (about 1 million). The extracted data was placed into a MySQL database. — Aug. 20, 2015 — 1.1M pages scraped

Web scraping of tidyforms.com. I have scraped the structure of categories and all PDF documents (about 8 thousand). — July 20, 2015 — 8K pages scraped

I built a database of about two million companies from 2gsi.ru. For each company, I've extracted all available data, including phone numbers, email, website, list of topics, and address. The database covers 98 cities. — June 4, 2015 — 2.1M pages scraped

I have scraped 60 thousand articles from a website. For each article I have also scraped its images, 100 thousand images in total. The result was delivered as a CSV file with the titles and contents of the articles. In each article, paths to images are changed to paths to local files. — May 31, 2015 — 60K pages scraped
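
The image-path rewriting described above can be sketched roughly as follows. This is an illustration only, not the original script: the function names, the `images/` directory, and the hash-based file naming are assumptions, and a regular expression is used for brevity where a real HTML parser would be more robust.

```python
import hashlib
import posixpath
import re
from urllib.parse import urljoin, urlsplit

# Matches the src attribute of an <img> tag: prefix, quote character, URL.
IMG_SRC_RE = re.compile(r'(<img\b[^>]*?\ssrc=)(["\'])(.*?)\2',
                        re.IGNORECASE | re.DOTALL)


def local_image_path(image_url, image_dir="images"):
    """Map an image URL to a stable local path (hypothetical naming scheme)."""
    ext = posixpath.splitext(urlsplit(image_url).path)[1].lower() or ".bin"
    digest = hashlib.sha1(image_url.encode("utf-8")).hexdigest()[:16]
    return f"{image_dir}/{digest}{ext}"


def localize_images(html, page_url, image_dir="images"):
    """Rewrite <img src> values to local paths.

    Returns the rewritten HTML and a dict {absolute_image_url: local_path}
    that a separate download step could work through.
    """
    downloads = {}

    def replace(match):
        absolute = urljoin(page_url, match.group(3))
        local = local_image_path(absolute, image_dir)
        downloads[absolute] = local
        quote = match.group(2)
        return f"{match.group(1)}{quote}{local}{quote}"

    return IMG_SRC_RE.sub(replace, html), downloads
```

Deriving the file name from a hash of the absolute URL means the same image referenced from several articles maps to one local file.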

I have scraped the ID and name of all vk.com users from the catalog at vk.com/catalog.php. The resulting list contains 252 million IDs. — May 25, 2015 — 3M pages scraped

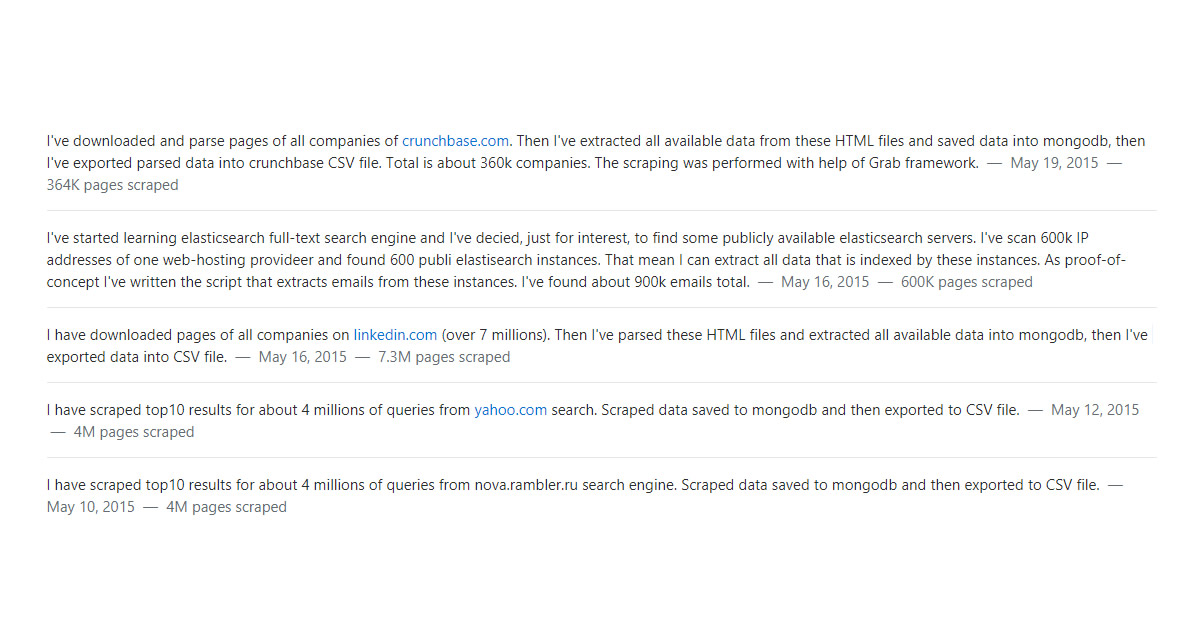

I've downloaded and parsed the pages of all companies on crunchbase.com. Then I've extracted all available data from these HTML files and saved it into mongodb, then I've exported the parsed data into a CSV file. The total is about 360k companies. The scraping was performed with the help of the Grab framework. — May 19, 2015 — 364K pages scraped

I've started learning the elasticsearch full-text search engine and I've decided, just out of interest, to find some publicly available elasticsearch servers. I've scanned 600k IP addresses of one web-hosting provider and found 600 public elasticsearch instances. That means I can extract all data that is indexed by these instances. As a proof of concept I've written a script that extracts emails from these instances. I've found about 900k emails in total. — May 16, 2015 — 600K pages scraped

I have downloaded the pages of all companies on linkedin.com (over 7 million). Then I've parsed these HTML files and extracted all available data into mongodb, then I've exported the data into a CSV file. — May 16, 2015 — 7.3M pages scraped

I have scraped the top 10 results for about 4 million queries from yahoo.com search. The scraped data was saved to mongodb and then exported to a CSV file. — May 12, 2015 — 4M pages scraped

I have scraped the top 10 results for about 4 million queries from the nova.rambler.ru search engine. The scraped data was saved to mongodb and then exported to a CSV file. — May 10, 2015 — 4M pages scraped

I have scraped search results for about 4 million queries from the baidu.com search engine. The scraped data was saved to mongodb and then exported to a CSV file. Search results from baidu.com do not provide a direct link to the external resource, so I wrote a script that follows the internal baidu link to find the real URL of the external web resource. — May 8, 2015 — 4M pages scraped
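
Following an internal search-engine link to the real external URL can be sketched as below. This is a hedged illustration, not the original script: it assumes the internal link answers with an HTTP 3xx redirect and a `Location` header and that the server accepts `HEAD` requests. The names `fetch_location` and `resolve_external_url` are hypothetical.

```python
import urllib.error
import urllib.request
from urllib.parse import urljoin


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following redirects so the 3xx response is visible."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # urllib then raises HTTPError for the 3xx response


def fetch_location(url, timeout=10):
    """Return the Location header of a 3xx response, or None if not redirected."""
    opener = urllib.request.build_opener(_NoRedirect)
    request = urllib.request.Request(url, method="HEAD")
    try:
        with opener.open(request, timeout=timeout):
            return None
    except urllib.error.HTTPError as err:
        if 300 <= err.code < 400:
            return err.headers.get("Location")
        raise


def resolve_external_url(url, fetch=fetch_location, max_hops=5):
    """Follow redirects hop by hop and return the last URL reached.

    `fetch` is injectable so the logic can be exercised without network access.
    """
    seen = set()
    for _ in range(max_hops):
        if url in seen:  # redirect loop: give up and return what we have
            break
        seen.add(url)
        location = fetch(url)
        if not location:
            return url
        url = urljoin(url, location)  # Location may be a relative URL
    return url
```

Resolving hop by hop, rather than letting the HTTP client follow redirects silently, makes it possible to cap the number of hops and to stop at the first URL outside the search engine if desired.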

I was given a number of keywords. With the vk API I've found groups in vkontakte that contain these keywords in their names. Then I've extracted data about the members of these groups. — May 1, 2015 — 100K pages scraped

I've developed a MOOC course aggregator, http://oed.io. The project consists of two parts: an aggregation script and a website to browse/search the collected data. The aggregation script periodically collects courses from a number of major MOOC providers: alison, coursera, edx, futurelearn, iversity, open2study, openlearning, udacity, udemy. Found courses are saved to a database. The website allows browsing the found courses and searching for courses by category or search query. — April 18, 2015 — 50K pages scraped

I have scraped all news sources that are used by alltop.com to show popular news. Then I've scraped the home page of each found website and extracted the URLs of its RSS feeds. The resulting data was exported to a CSV file. — Feb. 19, 2015 — 30K pages scraped
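
Extracting feed URLs from a home page can be sketched with the standard library alone. This is an assumption about the approach, not the original script: it relies on the common feed autodiscovery convention of `<link rel="alternate" type="application/rss+xml">` tags, and the names `FeedLinkParser` and `find_feed_urls` are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# MIME types commonly used for feed autodiscovery links.
FEED_TYPES = {"application/rss+xml", "application/atom+xml"}


class FeedLinkParser(HTMLParser):
    """Collect feed URLs advertised through <link rel="alternate"> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.found = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {name: (value or "") for name, value in attrs}
        rel_tokens = attr.get("rel", "").lower().split()
        mime = attr.get("type", "").strip().lower()
        href = attr.get("href", "").strip()
        if "alternate" in rel_tokens and mime in FEED_TYPES and href:
            url = urljoin(self.base_url, href)  # resolve relative hrefs
            if url not in self.found:
                self.found.append(url)


def find_feed_urls(html, base_url):
    """Return feed URLs found in the HTML, in document order, without duplicates."""
    parser = FeedLinkParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.found
```

Sites that do not advertise their feeds this way would need a fallback, such as probing conventional paths, which this sketch does not attempt.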